191014K02 | Day 2 Lecture 1


Vertex Cover

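Input: A graph $G = (V, E)$.

Task: Find $S \subseteq V$ such that for all edges $\{u, v\} \in E$, $S \cap \{u, v\} \neq \emptyset$, and minimize $|S|$.

Input: A graph $G = (V, E)$ and $k \in \mathbb{N}$.

Task: Find $S \subseteq V$ such that for all edges $\{u, v\} \in E$, $S \cap \{u, v\} \neq \emptyset$, and $|S| \leq k$.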

The naive algorithm by brute force, examining all subsets of at most $k$ vertices, is $n^{O(k)}$ in damages. Can we do better?

The answer turns out to be yes: we can improve from $n^{O(k)}$ to $c^k \cdot n^{O(1)}$ deterministic time for a constant $c$, which is fixed-parameter tractable in $k$.

Having said that, we will begin with a very elegant randomized algorithm for Vertex Cover, which essentially picks an edge uniformly at random, then picks one of its endpoints uniformly at random and adds it to the solution, for as long as there are edges left.

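ALG($G$)

$S \leftarrow \emptyset$

while $G$ has at least one edge:

- pick $e \in E(G)$ u.a.r.

- pick $v \in e$ u.a.r.

- set $S \leftarrow S \cup \{v\}$ and $G \leftarrow G - v$

Output $S$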
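To make the loop concrete, here is a minimal Python sketch of ALG, assuming the graph is given as a list of `(u, v)` edge pairs over hashable vertex names. The function name `random_vertex_cover` and the optional `rng` argument are our own choices. Rebuilding the edge list after every deletion makes this quadratic rather than linear; it is meant for reading, not speed.

```python
import random

def random_vertex_cover(edges, rng=random):
    """Randomized ALG for Vertex Cover (sketch).

    edges: list of (u, v) pairs describing a simple graph.
    rng:   anything with a .choice method, e.g. random.Random(seed).
    """
    remaining = list(edges)             # E(G) for the current graph G
    cover = set()                       # the solution S, initially empty
    while remaining:                    # while G has at least one edge
        u, v = rng.choice(remaining)    # pick e in E(G) u.a.r.
        x = rng.choice((u, v))          # pick an endpoint of e u.a.r.
        cover.add(x)                    # S <- S + {x}
        # G <- G - x: every edge touching x is now covered, so drop it
        remaining = [e for e in remaining if x not in e]
    return cover


edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
S = random_vertex_cover(edges, random.Random(7))
print(S, all(u in S or v in S for u, v in edges))
```

Whatever the random choices, the returned set covers every edge, which is the second claim below.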

Here are a few claims about this cute algorithm:

- ALG always runs in polynomial¹ time.

- $S$ is always a vertex cover.

- $\Pr[S \text{ is an optimal vertex cover}] \geq 2^{-|\mathrm{OPT}|}$.

The first two claims follow quite directly from the operations of the algorithm and the definition of a vertex cover.

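What about the third? Well: let $\mathrm{OPT}$ be some fixed optimal vertex cover, and suppose $|\mathrm{OPT}| = k$. Initially, note that $S = \emptyset \subseteq \mathrm{OPT}$. In each round, $\Pr[v \in \mathrm{OPT}] \geq 1/2$, since $e \cap \mathrm{OPT} \neq \emptyset$ by definition. If $v \in \mathrm{OPT}$ in every round of the algorithm, then $S \subseteq \mathrm{OPT}$, and since $S$ is a vertex cover and $\mathrm{OPT}$ is minimum, $S = \mathrm{OPT}$, which is awesome. Each such round adds a new vertex of $\mathrm{OPT}$ to $S$, so there are at most $k$ rounds, and said awesomeness manifests with probability at least $2^{-k}$.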

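Bonus: repeat the algorithm $2^k$ times and retain the smallest solution to get an overall constant success probability:

$$\Pr[\text{all } 2^k \text{ runs fail}] \leq \left(1 - 2^{-k}\right)^{2^k} \leq e^{-1}.$$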
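A small Python sketch of the repetition trick, under the same edge-list assumption as before. `amplified_vertex_cover` and its `rounds_factor` knob are hypothetical names: running `c * 2**k` independent copies and keeping the smallest output fails only if every copy misses an optimum, which happens with probability at most $(1 - 2^{-k})^{c \cdot 2^k} \leq e^{-c}$, using $1 - x \leq e^{-x}$. The brute-force helper is only there to check answers on tiny graphs.

```python
import math
import random
from itertools import combinations

def random_vertex_cover(edges, rng):
    """One run of ALG: pick a random edge, then a random endpoint, repeat."""
    remaining, cover = list(edges), set()
    while remaining:
        x = rng.choice(rng.choice(remaining))   # random edge, then random endpoint
        cover.add(x)
        remaining = [e for e in remaining if x not in e]
    return cover

def amplified_vertex_cover(edges, k, rng, rounds_factor=1):
    """Hypothetical helper: run ALG rounds_factor * 2**k times, keep the smallest cover."""
    runs = (random_vertex_cover(edges, rng) for _ in range(rounds_factor * 2 ** k))
    return min(runs, key=len)

def failure_bound(k, rounds_factor=1):
    """Upper bound on Pr[no run is optimal] if each run succeeds w.p. >= 2**-k."""
    return (1 - 2.0 ** -k) ** (rounds_factor * 2 ** k)

def min_vertex_cover_size(edges, vertices):
    """Brute force over subsets by increasing size; tiny graphs only."""
    for size in range(len(vertices) + 1):
        for cand in combinations(vertices, size):
            chosen = set(cand)
            if all(u in chosen or v in chosen for u, v in edges):
                return size

cycle5 = [(i, (i + 1) % 5) for i in range(5)]
best = amplified_vertex_cover(cycle5, k=3, rng=random.Random(0), rounds_factor=20)
print(len(best), min_vertex_cover_size(cycle5, range(5)), failure_bound(3, 20) <= math.exp(-20))
```

Here `k` only needs to be an upper bound on $|\mathrm{OPT}|$, as in the decision version of the problem.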

Approximation. Do we expect ALG to be a reasonable approximation? It turns out: yes!

In particular: we will show that the size of the vertex cover output by ALG is at most twice OPT in expectation.

For a graph $G$, define $X_G$ to be the random variable returning the size of the set output by the algorithm on $G$.

For integers $n, k \geq 0$ define:

$$T(n, k) := \max_G \mathbb{E}[X_G],$$

where the max is taken over all graphs $G$ with at most $n$ vertices² and a vertex cover of size at most $k$.

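Now let’s analyze the number $T(n, k)$. Let $G$ be the “worst-case graph” that bears witness to the max in the definition of $T(n, k)$, and fix an optimal vertex cover $\mathrm{OPT}$ of $G$, so that $|\mathrm{OPT}| \leq k$. Run the first step of ALG on $G$. Suppose we choose to pick the edge $e = \{u, v\}$ in this step.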

Note that:

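- ALG adds $u$ with probability $\frac{1}{2}$ and $v$ with probability $\frac{1}{2}$.

- $e \cap \mathrm{OPT} \neq \emptyset$; in particular, we let $u \in e \cap \mathrm{OPT}$.

- $\mathbb{E}[X_G \mid e] = 1 + \frac{1}{2}\mathbb{E}[X_{G-u}] + \frac{1}{2}\mathbb{E}[X_{G-v}]$.

- $G - u$ is a graph on at most $n$ vertices with a vertex cover of size at most $k - 1$ (namely $\mathrm{OPT} \setminus \{u\}$).

- $G - v$ is a graph on at most $n$ vertices with a vertex cover of size at most $k$ (namely $\mathrm{OPT} \setminus \{v\}$).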

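Since such a $u$ exists for every choice of $e$, averaging over $e$ gives $T(n, k) \leq 1 + \frac{1}{2}T(n, k-1) + \frac{1}{2}T(n, k)$. Rearranging terms, we get:

$$T(n, k) \leq 2 + T(n, k-1).$$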

Expanding the recurrence, and using $T(n, 0) = 0$ (a graph with an empty vertex cover has no edges), we have $T(n, k) \leq 2k$, as claimed earlier.
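The bound $T(n, k) \leq 2k$ can be sanity-checked on small graphs by computing $\mathbb{E}[X_G]$ exactly: condition on the first edge and endpoint, exactly as in the analysis above, and recurse. This sketch assumes simple graphs stored as a `frozenset` of two-element `frozenset`s (so they can be cached), uses `Fraction` to avoid rounding, and is exponential, so it is only meant for toy inputs. All function names are our own.

```python
from fractions import Fraction
from functools import lru_cache
from itertools import combinations

def graph(pairs):
    """Simple graph as a frozenset of 2-element frozensets (hashable, so cacheable)."""
    return frozenset(frozenset(p) for p in pairs)

def delete_vertex(edges, x):
    """Edges of G - x."""
    return frozenset(e for e in edges if x not in e)

@lru_cache(maxsize=None)
def expected_output_size(edges):
    """Exact E[X_G]: average over the first edge {u, v}, then over the endpoint."""
    if not edges:
        return Fraction(0)
    total = Fraction(0)
    for e in edges:
        u, v = tuple(e)
        total += Fraction(1, 2) * (expected_output_size(delete_vertex(edges, u))
                                   + expected_output_size(delete_vertex(edges, v)))
    return 1 + total / len(edges)

def min_vertex_cover_size(edges):
    """Brute-force OPT, for comparison on tiny graphs."""
    vertices = sorted(set().union(*edges))
    for size in range(len(vertices) + 1):
        for cand in combinations(vertices, size):
            if all(e & set(cand) for e in edges):
                return size

star5 = graph((0, i) for i in range(1, 6))
print(expected_output_size(star5), min_vertex_cover_size(star5))  # prints: 31/16 1
```

On a star with $s$ leaves the exact value is $2 - 2^{1-s}$ while the optimum is $1$, so the factor of two cannot be improved in general.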

Feedback Vertex Set

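Now we turn to a problem similar to vertex cover, except that we are “killing cycles” instead of “killing edges”.

Input: A graph³ $G = (V, E)$ and $k \in \mathbb{N}$.

Task: Find $S \subseteq V$ such that $G - S$ is a forest⁴ and $|S| \leq k$.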

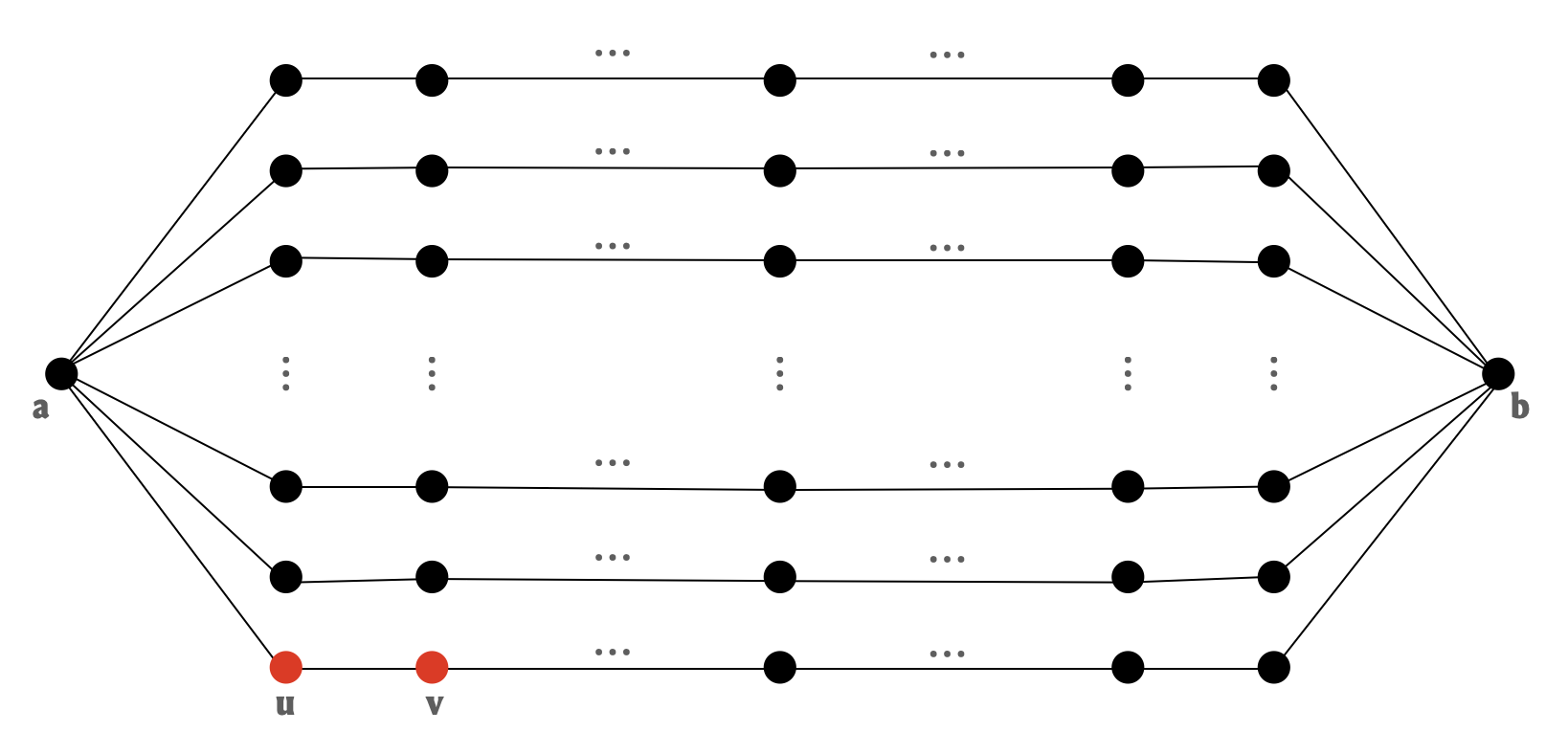

If we try to mimic the cute algorithm from before, we might easily be in trouble: the driving observation (that a random edge has at least one of its endpoints in the solution with a reasonable enough probability) can fail spectacularly for FVS:

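One thing about this example is the large number of pendant vertices sticking out prominently, and these clearly contribute to the badness of the situation. Happily, it turns out that we can get rid of these:

Lemma 1. If $v$ is a vertex of degree at most one in $G$, then $G$ has an FVS of size at most $k$ if and only if $G - v$ does.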

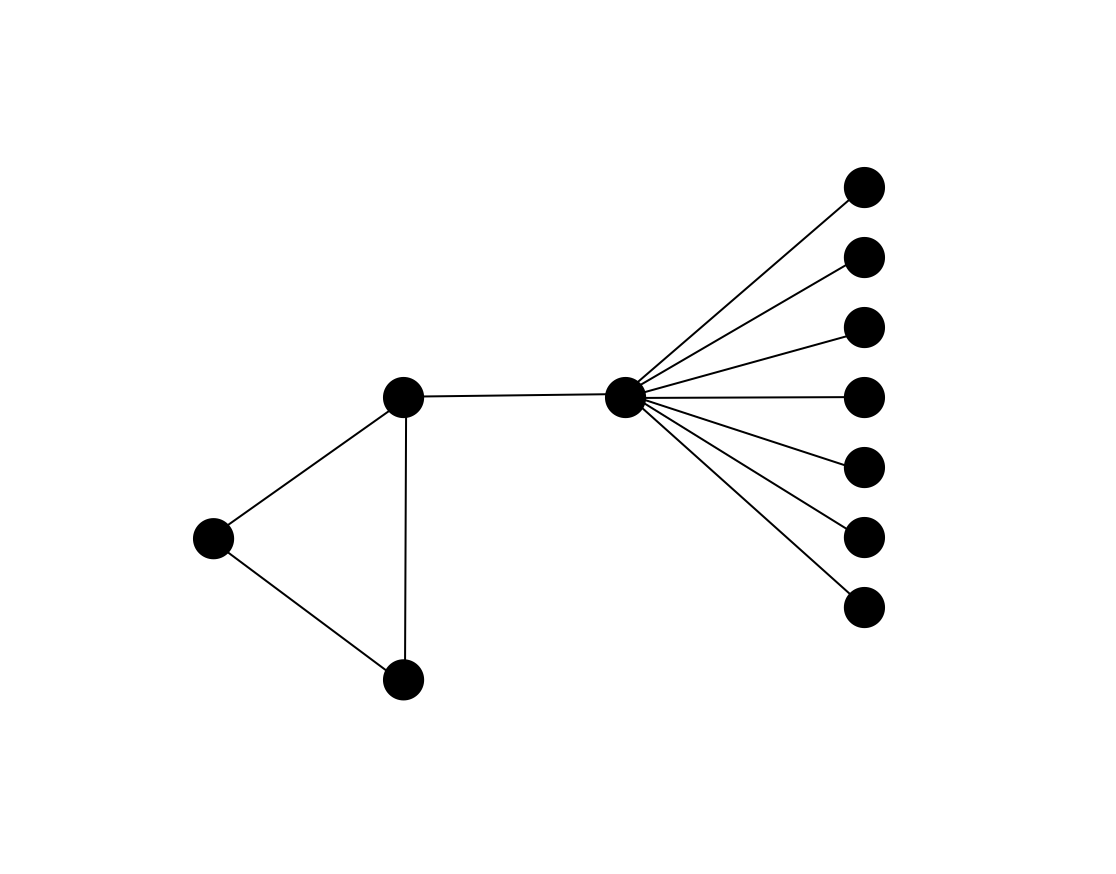

Consider graphs with no pendant vertices and fix an optimal FVS $S$. Is it true that a reasonable fraction of edges are guaranteed to be incident to $S$? Well… not yet:

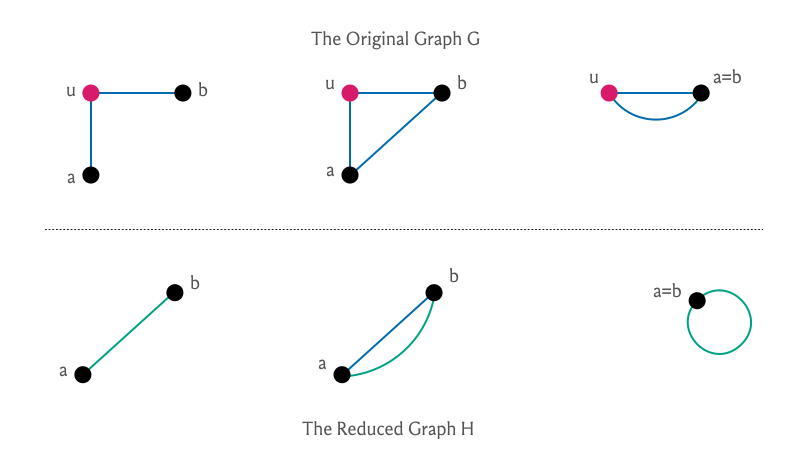

However, continuing our approach of conquering-by-observing-patterns-in-counterexamples, note that the example above has an abundance of vertices that have degree two. Can we get rid of them? Well, isolated and pendant vertices were relatively easy because they don’t even belong to cycles, but that is not quite true for vertices of degree two. Can we still hope to shake them off?

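One thing about a degree-two vertex $v$ is that any cycle containing $v$ must also contain both of its neighbors⁵. So you might suggest that we can ditch $v$ and just work with its neighbors instead. This intuition, it turns out, can indeed be formalized:

Lemma 2. Let $v$ be a vertex of degree two without a self-loop, with neighbors $u$ and $w$ (possibly $u = w$), and let $G'$ be obtained from $G - v$ by adding a new edge between $u$ and $w$. Then $G$ has an FVS of size at most $k$ if and only if $G'$ does.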

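Let us also get rid of self-loops (because we can):

Lemma 3. If $v$ has a self-loop, then $v$ belongs to every FVS of $G$, so $G$ has an FVS of size at most $k$ if and only if $G - v$ has an FVS of size at most $k - 1$.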

Now let’s apply Lemmas 1–3 exhaustively, which is to say we keep applying them until none of them are applicable (as opposed to applying them until we feel exhausted). Once we are truly stuck, we have a graph $H$ that is: (a) either empty or a graph whose minimum degree is at least three; and (b) equivalent to the original graph $G$ in the sense that any minimum FVS of $H$ can be extended to a minimum FVS of $G$ by some time travel: just play back the applications of Lemmas 1–3 in reverse order.
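Here is one way the exhaustive preprocessing might look in Python, assuming a multigraph stored as a set of vertices plus a list of `(u, v)` pairs, where repeated pairs are parallel edges and `(v, v)` is a self-loop. The name `reduce_fvs_instance` is hypothetical; it returns the vertices forced into the solution by the self-loop rule (Lemma 3) together with the reduced graph. Rules fire one at a time and degrees are recomputed after each, which is simple but far from the fastest way to do it.

```python
from collections import Counter

def reduce_fvs_instance(vertices, edges):
    """Apply the pendant, degree-two and self-loop rules until none applies (sketch).

    Returns (forced, V, E): `forced` lists vertices put into the solution
    by the self-loop rule; (V, E) is empty or has minimum degree >= 3.
    """
    V, E, forced = set(vertices), list(edges), []
    while True:
        deg = Counter()
        for u, v in E:
            deg[u] += 1
            deg[v] += 1                          # a self-loop adds 2
        loop = next((u for u, v in E if u == v), None)
        low = next((x for x in V if deg[x] <= 1), None)
        two = next((x for x in V if deg[x] == 2), None)
        if loop is not None:                     # Lemma 3: loop vertex is in every FVS
            forced.append(loop)
            V.discard(loop)
            E = [e for e in E if loop not in e]
        elif low is not None:                    # Lemma 1: never on a cycle
            V.discard(low)
            E = [e for e in E if low not in e]
        elif two is not None:                    # Lemma 2: bypass the degree-2 vertex
            # no self-loops remain, so `two` has exactly two incident edges
            a, b = [v if u == two else u for u, v in E if two in (u, v)]
            V.discard(two)
            E = [e for e in E if two not in e] + [(a, b)]
        else:
            return forced, V, E


print(reduce_fvs_instance(range(4), [(0, 1), (1, 2), (2, 0), (2, 3)]))
```

On a triangle with a pendant vertex, the rules peel off the pendant vertex, shrink the triangle to a self-loop, and force exactly one vertex into the solution.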

Recall that all this work was to serve our hope for having a cute algorithm for FVS as well. Let’s check in on how we are doing on that front: consider graphs whose minimum degree is at least three and fix an optimal FVS $S$. Is it true that a reasonable fraction of edges are guaranteed to be incident to $S$? Or can we come up with examples to demonstrate otherwise?

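This is a good place to pause and ponder: play around with examples to see if you can come up with bad cases as before. If you find yourself struggling, it would be for a good reason: we actually now do have the property we were after! Here’s the main claim that we want to make.

Key Lemma. If $G$ has minimum degree at least three and $S$ is an FVS of $G$, then at least half of the edges of $G$ have at least one endpoint in $S$.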
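Before working through the argument, the claim can be checked by brute force on a few tiny graphs. The sketch below assumes multigraphs given as a vertex list plus a list of `(u, v)` pairs (parallel edges and self-loops allowed). It enumerates every vertex subset, keeps the ones that are feedback vertex sets, and verifies that each touches at least half of the edges. It checks every FVS, not only optimal ones, which is what the argument below actually establishes; all function names are our own.

```python
from itertools import combinations

def is_forest(vertices, edges):
    """Union-find cycle test; a repeated pair or a self-loop counts as a cycle."""
    parent = {v: v for v in vertices}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving
            x = parent[x]
        return x

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:
            return False
        parent[ru] = rv
    return True

def check_key_lemma(vertices, edges):
    """True iff every FVS of the (min-degree >= 3) multigraph touches >= half the edges."""
    vertices = list(vertices)
    degree = {v: 0 for v in vertices}
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1                      # a self-loop adds 2, as usual
    assert min(degree.values()) >= 3, "the lemma assumes minimum degree three"
    for size in range(len(vertices) + 1):
        for cand in combinations(vertices, size):
            S = set(cand)
            rest = [v for v in vertices if v not in S]
            bad = [(u, v) for u, v in edges if u not in S and v not in S]
            if is_forest(rest, bad):        # S is a feedback vertex set
                if 2 * (len(edges) - len(bad)) < len(edges):
                    return False
    return True

k4 = [(a, b) for a in range(4) for b in range(a + 1, 4)]
petersen = ([(i, (i + 1) % 5) for i in range(5)]             # outer 5-cycle
            + [(i, i + 5) for i in range(5)]                 # spokes
            + [(5 + i, 5 + (i + 2) % 5) for i in range(5)])  # inner pentagram
print(check_key_lemma(range(4), k4), check_key_lemma(range(10), petersen))
```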

We argue this as follows: call an edge good if it has at least one of its endpoints in $S$, and bad otherwise.

We will demonstrate that the number of good edges is at least the number of bad edges: this implies the desired claim.

The bad edges. Let $F = G - S$; since $S$ is an FVS, $F$ is a forest. The bad edges are precisely the edges of $F$, that is, $E(F)$.

The good edges. Every edge that has one of its endpoints in $S$ and the other in $V(F)$ is a good edge. Recall that $G$ has minimum degree three, because of which:

- for every leaf in $F$ (a vertex of degree at most one in $F$), we have at least two good edges, and

- for every vertex that has degree two in $F$, we have at least one good edge.

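Write $V_{\leq 1}$, $V_2$, and $V_{\geq 3}$ for the sets of vertices of degree at most one, exactly two, and at least three in $F$. There are at least $2|V_{\leq 1}| + |V_2|$ good edges, and since $F$ is a forest, $|E(F)| \leq |V(F)|$. So at this point, it is enough to show that twice the number of leaves plus the number of degree-two vertices is at least $|E(F)|$. But this is quite intuitive if we simply stare at the following mildly stronger claim:

$$2|V_{\leq 1}| + |V_2| \geq |V(F)|,$$

which is equivalent to:

$$2|V_{\leq 1}| + |V_2| \geq |V_{\leq 1}| + |V_2| + |V_{\geq 3}|.$$

After cancelations, we have:

$$|V_{\leq 1}| \geq |V_{\geq 3}|.$$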

Note that this is true! Intuitively, the inequality is suggesting that every branching vertex in a tree pays for at least one leaf. This can be formalized by induction on the number of vertices of a tree, and then summed over the components of $F$.

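Denote the tree by $T$. If $T$ has a single vertex, that vertex has degree zero and the claim holds. Otherwise, remove a leaf $\ell$ to obtain $T'$, let $p$ be the neighbor of $\ell$, and apply the induction hypothesis on $T'$.

- If $p$ is a degree-two vertex in $T$, then $p$ becomes a leaf in $T'$, so the number of leaves and the number of high-degree vertices are the same in $T$ and $T'$, and the claim follows directly.

- If $p$ has degree three in $T$, then both quantities in the inequality increase by one when we transition from $T'$ to $T$.

- In the only remaining case ($p$ has degree one or at least four in $T$), the quantity on the left increases by one when we come to $T$ while the one on the right stays the same, which bodes well for the inequality.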
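A quick randomized sanity check of the counting inequality: the Python sketch below samples labelled trees by decoding uniformly random Prüfer sequences (a standard construction we picked for convenience, not something the notes rely on) and confirms that the number of vertices of degree at most one is at least the number of vertices of degree at least three, together with the form $2|V_{\leq 1}| + |V_2| \geq |V(T)|$. Here $V_{\leq 1}$, $V_2$, $V_{\geq 3}$ denote the vertices of degree at most one, exactly two, and at least three; the function names are hypothetical.

```python
import heapq
import random

def random_tree(n, rng):
    """Edges of a uniformly random labelled tree on vertices 0..n-1 (n >= 1),
    obtained by decoding a random Prüfer sequence."""
    if n == 1:
        return []
    seq = [rng.randrange(n) for _ in range(n - 2)]
    degree = [1] * n
    for x in seq:
        degree[x] += 1
    leaves = [v for v in range(n) if degree[v] == 1]
    heapq.heapify(leaves)
    edges = []
    for x in seq:
        leaf = heapq.heappop(leaves)        # smallest current leaf
        edges.append((leaf, x))
        degree[x] -= 1
        if degree[x] == 1:                  # x just became a leaf
            heapq.heappush(leaves, x)
    edges.append((heapq.heappop(leaves), heapq.heappop(leaves)))
    return edges

def degree_classes(n, edges):
    """Return (|V_<=1|, |V_2|, |V_>=3|) for a graph on vertices 0..n-1."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    low = sum(d <= 1 for d in deg)
    two = sum(d == 2 for d in deg)
    high = sum(d >= 3 for d in deg)
    return low, two, high

rng = random.Random(2024)
for _ in range(1000):
    n = rng.randint(1, 30)
    low, two, high = degree_classes(n, random_tree(n, rng))
    assert low >= high                      # every branching vertex pays for a leaf
    assert 2 * low + two >= n               # the "mildly stronger" form
print("all sampled trees satisfy the inequality")
```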

All this was leading up to cute randomized algorithm v2.0, i.e., adapted for FVS as follows:

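ALG($G$)

Preprocess:

- if $G$ is acyclic, RETURN $\emptyset$

- if $\exists$ a self-loop at a vertex $v$, RETURN $\{v\} \cup$ ALG($G - v$) (c.f. Lemma 3).

- if $\exists$ a vertex $v$ of degree at most one, RETURN ALG($G - v$) (c.f. Lemma 1).

- if $\exists$ a vertex $v$ of degree two with neighbors $u$ and $w$, RETURN ALG($G'$), where $G'$ is obtained from $G - v$ by adding a new edge $uw$ (c.f. Lemma 2).

Mindeg-3 instance:

- pick an edge $e \in E(G)$ u.a.r.

- pick $v \in e$ u.a.r.

- RETURN $\{v\} \cup$ ALG($G - v$)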

Claim. ALG always returns an FVS of $G$.

This follows from Lemmas 1–3 and induction on $|V(G)|$.

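Claim. If a minimum FVS of $G$ has size $k$, then ALG returns a minimum FVS of $G$ with probability at least $4^{-k}$.

This follows from Lemmas 1–3, the key lemma (a uniformly random edge has an endpoint in a fixed optimal FVS with probability at least $1/2$, and then that endpoint is picked with probability at least $1/2$), and induction on $|V(G)|$.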
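Putting the pieces together, here is a compact Python sketch of the FVS algorithm, written as a loop instead of recursion. As before, it assumes a multigraph given by a vertex collection and a list of `(u, v)` pairs. `random_fvs` and `best_of` are hypothetical names; `best_of` repeats the algorithm and keeps the smallest output, which, given a per-run success probability of at least $4^{-k}$, needs on the order of $4^k$ runs for a constant overall success probability.

```python
import random
from collections import Counter

def is_forest(vertices, edges):
    """Union-find cycle test; a repeated pair or a self-loop counts as a cycle."""
    parent = {v: v for v in vertices}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving
            x = parent[x]
        return x

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:
            return False
        parent[ru] = rv
    return True

def delete(edges, x):
    """Edges of G - x."""
    return [e for e in edges if x not in e]

def random_fvs(vertices, edges, rng=random):
    """One run of the randomized FVS algorithm (sketch); returns the chosen vertices."""
    V, E, S = set(vertices), list(edges), []
    while not is_forest(V, E):                   # "if G is acyclic, RETURN"
        deg = Counter()
        for u, v in E:
            deg[u] += 1
            deg[v] += 1                          # a self-loop adds 2
        loop = next((u for u, v in E if u == v), None)
        low = next((x for x in V if deg[x] <= 1), None)
        two = next((x for x in V if deg[x] == 2), None)
        if loop is not None:                     # self-loop: take the vertex
            x = loop
            S.append(x)
        elif low is not None:                    # degree <= 1: just drop it
            x = low
        elif two is not None:                    # degree 2: bypass it
            a, b = [v if u == two else u for u, v in E if two in (u, v)]
            V.discard(two)
            E = delete(E, two) + [(a, b)]
            continue
        else:                                    # min-degree-3 instance
            x = rng.choice(rng.choice(E))        # random edge, then random endpoint
            S.append(x)
        V.discard(x)
        E = delete(E, x)
    return S

def best_of(vertices, edges, runs, seed=0):
    """Repeat random_fvs and keep the smallest output."""
    rng = random.Random(seed)
    return min((random_fvs(vertices, edges, rng) for _ in range(runs)), key=len)

petersen = ([(i, (i + 1) % 5) for i in range(5)]
            + [(i, i + 5) for i in range(5)]
            + [(5 + i, 5 + (i + 2) % 5) for i in range(5)])
print(best_of(range(10), petersen, runs=640))
```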

Exact Algorithms

Coming Soon.

Footnotes

1. (even linear)

2. We could have also considered $\max_G \mathbb{E}[X_G]$, where the max is over all graphs whose vertex cover is of size at most $k$: but there are infinitely many graphs that have vertex covers of size at most $k$, and it is not immediate that the max is well-defined, so we restrict ourselves to graphs on at most $n$ vertices that have vertex covers of size at most $k$.

3. We allow more than one edge between a fixed pair of vertices, as well as self-loops.

4. A graph without cycles that is not necessarily connected.

5. Except when the cycle only contains $v$, i.e., $v$ has a self-loop.